Home Battery System in 2027

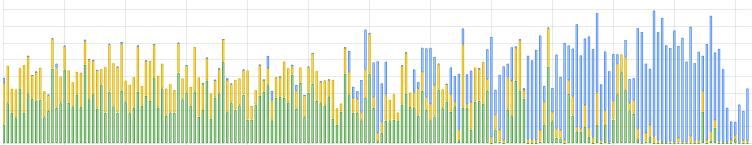

Welcome to 2027, the year the net metering policy in the Netherlands ended. This post looks at owning a home battery system from 2027 onwards and its financial benefit, based on minute-precise real-world usage and solar data collected in 2024. In my previous blog post about the Sessy Home Battery Experience, owning a home battery with a dynamic contract resulted in €161.42 more annual profit than having no home battery and a fixed-price contract....