In the Mendix Cloud we run thousands of Mendix apps on Cloud Foundry on AWS. Their Mendix Runtime Engines currently run in containers with 2, 4, 8 or 16 GB of memory. Mendix developers can start, stop, scale and upload new versions of their app themselves via our Developer Portal.

As a result, we must have diego-cell instances with at least 16 GB of memory available at all times, so that a Mendix developer can always start a 16 GB Runtime Engine.

We found that the way Diego schedules LRPs (Long-Running Processes) on diego-cell EC2 instances is not optimal for our use case. Diego has only one scheduling algorithm. In a nutshell, app instances (LRPs) get deployed to the diego-cell with the most resources available. This way app instances are balanced equally across diego-cell instances.

Nima and Jen did a really nice presentation during the last Cloud Foundry Summit in The Hague about how scheduling in Cloud Foundry works.

Let’s say you have a number of AWS EC2 m5.4xlarge (64 GB memory) diego-cell instances. At some point all diego-cell instances are filled up equally with app instances (LRPs) and each has about 16 GB of memory available. Once that drops to 14 or 15 GB, we have to add diego-cell instances to keep supporting the deployment of 16 GB Mendix Runtime Engines. But after scaling up, new app instances (LRPs) get scheduled to the new diego-cell instances, even when they are only 2, 4 or 8 GB app instances, until all diego-cell instances have about 16 GB available again.
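The effect described above can be replayed with a small simulation. This is a minimal sketch that only looks at memory and assumes placement always goes to the cell with the most free memory; Diego's real score also includes disk and container counts. The function name `pick_cell` and the numbers are made up for illustration.

```python
def pick_cell(free_gb, size_gb):
    """Return the index of the cell with the most free memory that fits size_gb."""
    candidates = [i for i, free in enumerate(free_gb) if free >= size_gb]
    if not candidates:
        return None  # no cell can host this instance
    return max(candidates, key=lambda i: free_gb[i])


# Three 64 GB cells that have drained to 14 GB free, plus one freshly added cell.
free_gb = [14, 14, 14, 64]
placements = []

# Deploy twenty-four small (2 GB) app instances after scaling up.
for _ in range(24):
    cell = pick_cell(free_gb, 2)
    free_gb[cell] -= 2
    placements.append(cell)

print(placements)  # every instance lands on the new cell (index 3)
print(free_gb)     # [14, 14, 14, 16]
```

Even though the old cells could easily host a 2 GB instance, the new cell absorbs all of them until it has drained to the same level as the others.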

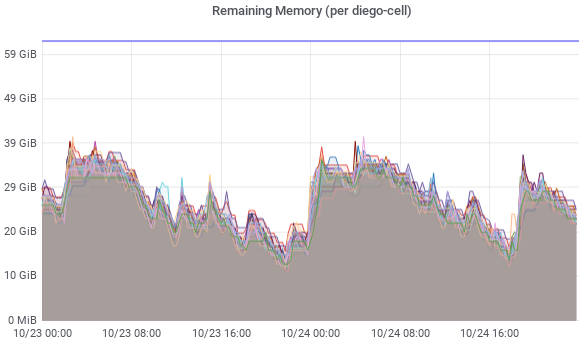

In practice it looks like this (20 diego-cell instances, 64GB memory):

Result: 25% of the memory of our diego-cell instances is unused, wasted.

Now we could scale up to AWS EC2 m5.8xlarge (128 GB memory), so we only waste 12.5%. But at some point we also want to support app instances with 32 GB memory, which would bring the waste back to 25%.
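The percentages follow directly from the headroom that has to stay free on every cell. A quick sketch of that arithmetic, assuming each cell keeps exactly one largest-instance slot free (the helper name `reserved_fraction` is hypothetical):

```python
def reserved_fraction(headroom_gb, cell_gb):
    """Fraction of a cell's memory that stays unused as headroom."""
    return headroom_gb / cell_gb


# (largest app instance size, diego-cell memory) in GB
for headroom_gb, cell_gb in [(16, 64), (16, 128), (32, 128)]:
    pct = reserved_fraction(headroom_gb, cell_gb) * 100
    print(f"{headroom_gb} GB headroom on a {cell_gb} GB cell: {pct:.1f}% unused")
```

Larger cells only help as long as the largest supported app instance size stays the same.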

We have looked into isolation segments, for example one isolation segment per app instance size. Unfortunately that does not work for us. Mendix developers don’t notice this because it’s abstracted away for them, but they run different app instance sizes in one “Org”, and isolation segments apply to an “Org”.

The quest for a new scheduling algorithm

I’ve been looking at this inefficient usage of resources for quite a while now. I also investigated how the scheduling algorithm in Diego works before Nima and Jen gave the presentation. During the Cloud Foundry Summit I had a chat with Nima about whether it would make sense to invest time in adding or changing the scheduler in Diego. Project Eirini, where app instances run on Kubernetes, was close to a version 1.0 release. Kubernetes is more flexible with scheduling algorithms, so that could solve our issue as well.

First I thought: “No, let’s wait for Eirini.” But it would probably still take a year before we would migrate to Eirini in production. Having an improved scheduler in Diego would save us costs right now.

Goal of the new scheduling algorithm

Mendix apps are memory heavy. In a shared environment, with many Mendix Runtime Engines running on one Cloud Foundry diego-cell instance, we notice that there are more than enough CPU resources available. Mendix developers mainly scale up their app by adding more memory (or adding more instances). So in our case we want to fill up diego-cell instances as much as possible.

How scheduling LRPs in Diego works technically

As Nima explained in the presentation, the scheduler decides where to deploy an app instance (LRP) based on a score that each diego-cell instance provides. The lowest score wins. The score is calculated here:

- https://github.com/cloudfoundry/auction/blob/f5726c4/auctionrunner/cell.go#L46

- https://github.com/cloudfoundry/rep/blob/a4b681f/resources.go#L115

- https://github.com/cloudfoundry/rep/blob/a4b681f/resources.go#L209

It basically boils down to:

Score = ((Memory + Disk + Containers) / 3) + StartingContainers + Locality

- Memory: fraction of the diego-cell's memory that is in use

- Disk: fraction of the diego-cell's disk that is in use

- Containers: fraction of the container limit that is in use (max 256 per diego-cell)

- StartingContainers: number of starting containers x weight (usually 0.25)

- Locality: 1000 when it’s already hosting an instance of the same app

For example:

((0.5 + 0.5 + 0.39) / 3) + 0.25 + 0 = 0.7133

- Memory: diego-cell has 50% of its memory available

- Disk: diego-cell has 50% of its disk available

- Containers: diego-cell runs 100 containers (100/256)

- StartingContainers: there is currently 1 container starting

- Locality: this diego-cell does not run an instance of the same app
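The example can be recomputed with a few lines of code. Diego itself is written in Go; the Python below is only a sketch of the arithmetic in the formula above, and the function and parameter names are made up for illustration.

```python
def cell_score(memory, disk, containers, starting_containers, hosts_same_app,
               starting_weight=0.25, locality_penalty=1000):
    """Score of one diego-cell for a given LRP; the lowest score wins.

    memory, disk and containers are the three resource terms from the
    formula above, each a value between 0 and 1.
    """
    resource_score = (memory + disk + containers) / 3
    starting_score = starting_containers * starting_weight
    locality_score = locality_penalty if hosts_same_app else 0
    return resource_score + starting_score + locality_score


# The example from the text: 0.5 memory, 0.5 disk, 100/256 (~0.39) containers,
# one starting container, no instance of the same app on this cell.
score = cell_score(0.5, 0.5, 0.39, starting_containers=1, hosts_same_app=False)
print(round(score, 4))  # 0.7133
```

The large locality value means a cell that already hosts an instance of the same app practically never wins against one that does not.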

The idea: Bin Pack First Fit Weight

Scaling the number of diego-cell instances up and down is based on the index number BOSH assigns to an instance. When you add one diego-cell instance and then remove one, the instance that was just created is the one that gets removed.

What if we could make a diego-cell more attractive to deploy to based on its index number? Then diego-cell instances with a lower index number would be filled up first, as long as they have enough resources available. This could be called Bin Pack First Fit.
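For reference, this is what a strict first-fit placement looks like when only memory is considered. It is a minimal sketch of the classic technique, not of what Diego does: the cells are assumed to be ordered by BOSH index, and the name `first_fit` and the instance sizes are made up for illustration.

```python
def first_fit(free_gb, size_gb):
    """Return the lowest-index cell with enough free memory, or None."""
    for index, free in enumerate(free_gb):
        if free >= size_gb:
            return index
    return None


free_gb = [64, 64, 64]  # three empty 64 GB cells, ordered by index
placements = []

for size_gb in [16, 8, 16, 16, 4, 16, 2]:
    cell = first_fit(free_gb, size_gb)
    free_gb[cell] -= size_gb
    placements.append(cell)

print(placements)  # [0, 0, 0, 0, 0, 1, 0]
print(free_gb)     # [2, 48, 64]
```

Cell 0 is packed almost completely before cell 1 is touched, and the last cell stays empty, so it can be removed or kept as headroom for a large instance.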

The index number can be displayed using the “bosh instances” command:

    $ bosh -d cf instances -i --column=instance --column=index
    Instance                                         Index
    [..]
    diego-cell/0342c42b-756e-4951-8280-495261e38f53  0
    diego-cell/16be34ce-bd34-4837-8431-51f6bc4a0fa8  1
    diego-cell/e3bec1d3-0899-4502-9f43-4049f53721b1  2
    diego-cell/2581addf-4f08-421e-ab9d-c52772f50315  3
    [..]

As with “StartingContainers”, we could add some weight to the total score based on the index number of a diego-cell instance. This also makes it possible to completely disable the Bin Pack First Fit component by setting the weight to 0, which keeps Diego’s existing algorithm.

It will work like this:

Score = ((Memory + Disk + Containers) / 3) + StartingContainers + Locality + Index

- Memory: fraction of the diego-cell's memory that is in use

- Disk: fraction of the diego-cell's disk that is in use

- Containers: fraction of the container limit that is in use (max 256 per diego-cell)

- StartingContainers: number of starting containers x weight (usually 0.25)

- Locality: 1000 when it’s already hosting an instance of the same app

- Index: BOSH index number x weight

Let’s take the previous example, assume all diego-cell instances are filled up equally and add an index weight of 0.25:

- diego-cell 0: ((0.5 + 0.5 + 0.39) / 3) + 0.25 + 0 + (0*0.25) = 0.7133

- diego-cell 1: ((0.5 + 0.5 + 0.39) / 3) + 0.25 + 0 + (1*0.25) = 0.9633

- diego-cell 2: ((0.5 + 0.5 + 0.39) / 3) + 0.25 + 0 + (2*0.25) = 1.2133

- diego-cell 3: ((0.5 + 0.5 + 0.39) / 3) + 0.25 + 0 + (3*0.25) = 1.4633

In this case the next app instance will be deployed to diego-cell 0. Exactly what we want. The weight, currently 0.25, can be increased to make diego-cell instances with a lower BOSH index number even more attractive.
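The four scores can be recomputed in a few lines. This is only a sketch of the arithmetic, written in Python rather than Go, with a hypothetical helper name `weighted_score`; it is not the code from the Proof of Concept.

```python
def weighted_score(base_score, bosh_index, index_weight=0.25):
    """Add the index component to the existing score of a diego-cell."""
    return base_score + bosh_index * index_weight


# Existing score from the earlier example, identical for all four cells.
base = (0.5 + 0.5 + 0.39) / 3 + 0.25 + 0

scores = {index: round(weighted_score(base, index), 4) for index in range(4)}
print(scores)  # {0: 0.7133, 1: 0.9633, 2: 1.2133, 3: 1.4633}

winner = min(scores, key=scores.get)
print(winner)  # 0, the cell with the lowest BOSH index

# With a weight of 0 the index has no influence and the original score remains.
assert weighted_score(base, 3, index_weight=0) == base
```

Because the resource part of the score stays between 0 and 1, a weight of 0.25 lets a cell outbid its lower-indexed neighbour only when the resource part of its score is more than 0.25 lower; a higher weight pushes the behaviour closer to strict first fit.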

A Proof of Concept

As a Proof of Concept the above has been developed in Diego: https://github.com/pommi/diego-release/tree/bin-pack-first-fit

To test the updated scheduling algorithm, while this is not part of the official diego-release (yet), we create a custom diego-release and use that in our Cloud Foundry setup.

NOTE: this diego-release is based on diego-release v2.34 (cf-deployment v9.5)

    $ git clone --recurse-submodules --branch bin-pack-first-fit https://github.com/pommi/diego-release.git
    $ cd diego-release
    $ bosh --sha2 cr --timestamp-version --tarball=diego-release-bin-pack-first-fit-v2.34.0-5-g0b5569154.tgz --force

Upload diego-release-bin-pack-first-fit-v2.34.0-5-g0b5569154.tgz somewhere online and create an ops file to deploy this diego-release version instead of the default one:

    - type: replace
      path: /releases/name=diego
      value:
        name: diego
        url: https://<your-domain.tld>/diego-release-bin-pack-first-fit-v2.34.0-5-g0b5569154.tgz
        sha1: <sha1 of diego-release-bin-pack-first-fit-v2.34.0-5-g0b5569154.tgz>
        version: <version from the `bosh --sha2 cr` command>
    - type: replace
      path: /instance_groups/name=scheduler/jobs/name=auctioneer/properties/diego/auctioneer/bin_pack_first_fit_weight?
      value: 0.25

The result: Weighted Bin Pack First Fit

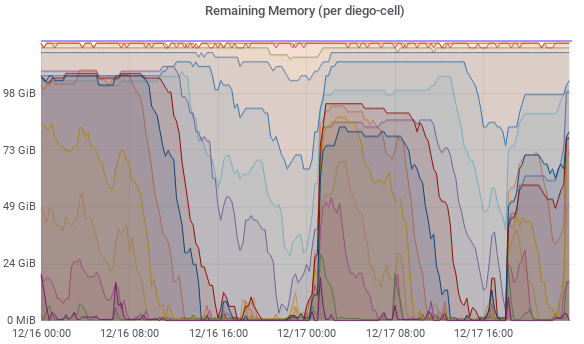

The result is actually pretty amazing 🙂 (15 diego-cell instances, 128GB memory):

This graph shows a 48-hour period in which the deployment pattern of Mendix app instances is similar to that of the previous graph. The added “Bin Pack First Fit Weight” clearly has an impact: app instances (LRPs) are no longer spread equally. In this case we could remove 2 or 3 diego-cell instances, while keeping at least 2 to 3 diego-cell instances with 16 GB of memory available. 😀

And the cost savings? An AWS On-Demand EC2 m5.4xlarge instance costs around $18.432 per day in AWS region us-east-1. Let’s say you run 100 diego-cell instances in total and you could now remove 20 to 25, while keeping 16 GB of memory available on at least a couple of diego-cell instances. That is a saving of $368.64 to $460.80 per day, or $134,553.60 to $168,192.00 per year, in On-Demand EC2 costs. 😎 (With Reserved or Spot Instances this is of course less.)
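The savings can be recomputed from the daily price. A small sketch, assuming the $18.432 per day quoted above and a 365-day year; the names are made up for illustration.

```python
DAILY_COST_USD = 18.432  # On-Demand m5.4xlarge per day, as quoted in the text


def savings_usd(cells_removed, days=1):
    """On-Demand cost saved by removing a number of diego-cell instances."""
    return cells_removed * DAILY_COST_USD * days


for cells_removed in (20, 25):
    per_day = savings_usd(cells_removed)
    per_year = savings_usd(cells_removed, days=365)
    print(f"{cells_removed} cells: ${per_day:,.2f} per day, ${per_year:,.2f} per year")
```

For 20 and 25 removed cells this prints $368.64 and $460.80 per day, and $134,553.60 and $168,192.00 per year.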

We’re hiring

Want to join our team and work on cool stuff like this? Apply for a job at Mendix.